Lindeberg's condition

In probability theory, Lindeberg's condition is a sufficient condition (and under certain conditions also a necessary condition) for the central limit theorem to hold for a sequence of independent random variables. Unlike the classical central limit theorem, which requires the random variables in question to have finite mean and variance and to be both independent and identically distributed, the version based on Lindeberg's condition only requires that they have finite mean and variance, be independent, and satisfy Lindeberg's condition. It is named after the Finnish mathematician Jarl Waldemar Lindeberg.


Statement

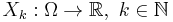

Let  be a probability space, and

be a probability space, and  , be independent random variables defined on that space. Assume the expected values

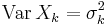

, be independent random variables defined on that space. Assume the expected values  and variances

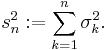

and variances  exist and are finite. Also let

exist and are finite. Also let

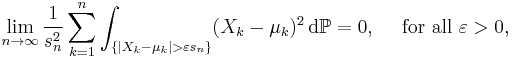

If this sequence of independent random variables  satisfies the Lindeberg's condition:

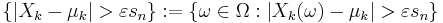

satisfies the Lindeberg's condition:

(where the integral is a Lebesgue integral over the set  ), then the central limit theorem holds, i.e. the random variables

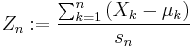

), then the central limit theorem holds, i.e. the random variables

converges in distribution to a standard normal random variable as

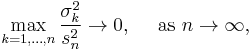
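As a rough numerical illustration, the Lindeberg ratio $\frac{1}{s_n^2}\sum_{k=1}^n \mathbb{E}\big[(X_k-\mu_k)^2\,\mathbf{1}\{|X_k-\mu_k|>\varepsilon s_n\}\big]$ can be evaluated in closed form for a simple family. The sketch below assumes $X_k \sim \mathrm{Uniform}(-a_k, a_k)$, so that $\mu_k = 0$ and $\sigma_k^2 = a_k^2/3$. The choice of family and the function names `truncated_second_moment` and `lindeberg_ratio` are illustrative assumptions, not part of the statement above.

```python
import math


def truncated_second_moment(a, c):
    """E[X^2 * 1{|X| > c}] for X ~ Uniform(-a, a), with a > 0 and c >= 0.

    For c < a this is (1 / (2a)) * 2 * integral of x^2 from c to a,
    which equals (a^3 - c^3) / (3a). For c >= a the event is empty.
    """
    if c >= a:
        return 0.0
    return (a ** 3 - c ** 3) / (3.0 * a)


def lindeberg_ratio(half_widths, eps):
    """Lindeberg ratio for independent X_k ~ Uniform(-a_k, a_k), k = 1..n.

    half_widths: the values a_1, ..., a_n (an assumption of this sketch).
    eps: the epsilon in Lindeberg's condition.
    """
    variances = [a * a / 3.0 for a in half_widths]  # Var of Uniform(-a, a)
    s_n_squared = sum(variances)
    s_n = math.sqrt(s_n_squared)
    total = sum(truncated_second_moment(a, eps * s_n) for a in half_widths)
    return total / s_n_squared


if __name__ == "__main__":
    eps = 0.1
    for n in (5, 20, 80, 320):
        equal = lindeberg_ratio([1.0] * n, eps)
        # Use at most 30 geometric terms to keep the floats well scaled.
        geometric = lindeberg_ratio([2.0 ** k for k in range(1, min(n, 30) + 1)], eps)
        print(n, equal, geometric)
```

With equal half-widths the ratio is exactly zero as soon as $\varepsilon s_n$ exceeds the common half-width, so Lindeberg's condition holds. With half-widths $a_k = 2^k$ the last few terms dominate $s_n^2$ and the ratio stays bounded away from zero for small $\varepsilon$, so the condition fails for that sequence.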

Lindeberg's condition is sufficient, but not in general necessary (i.e. the converse implication does not hold in general). However, if the sequence of independent random variables in question satisfies

$$\max_{k=1,\ldots,n} \frac{\sigma_k^2}{s_n^2} \to 0 \quad \text{as } n \to \infty,$$

then Lindeberg's condition is both sufficient and necessary, i.e. it holds if and only if the conclusion of the central limit theorem holds.
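A standard way to see that Lindeberg's condition is not necessary in general is a Gaussian sequence with geometrically growing variances. The specific choice $\sigma_k^2 = 2^k$ below is an illustrative assumption. Take independent $X_k \sim \mathcal{N}(0, 2^k)$, so that $\mu_k = 0$ and

$$s_n^2 = \sum_{k=1}^n 2^k = 2^{n+1} - 2, \qquad \frac{\sigma_n^2}{s_n^2} = \frac{2^n}{2^{n+1}-2} \to \frac{1}{2} \quad \text{as } n \to \infty.$$

A sum of independent centered normal variables is again normal, so $\frac{1}{s_n}\sum_{k=1}^n X_k$ is exactly standard normal for every $n$, and the conclusion of the central limit theorem holds trivially. On the other hand, $\max_{k=1,\ldots,n} \sigma_k^2 / s_n^2$ does not tend to $0$, and since Lindeberg's condition would force that maximum to vanish, Lindeberg's condition fails for this sequence.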

Interpretation

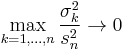

Because, the Lindeberg condition implies  as

as  , it guarantees that the contribution of any individual random variable

, it guarantees that the contribution of any individual random variable  (

( ) to the variance

) to the variance  is arbitrarily small, for sufficiently large values of

is arbitrarily small, for sufficiently large values of  .

.
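The implication from Lindeberg's condition to the vanishing of $\max_{k} \sigma_k^2 / s_n^2$ can be sketched in a few lines, writing $\mu_k$, $\sigma_k^2$ and $s_n^2 = \sum_{k=1}^n \sigma_k^2$ for the means, variances and cumulative variance of the $X_k$. Fix $\varepsilon > 0$ and $1 \le k \le n$, and split the variance of $X_k$ according to whether $|X_k - \mu_k|$ exceeds $\varepsilon s_n$:

$$\sigma_k^2 = \int_{\{|X_k-\mu_k|\le \varepsilon s_n\}} (X_k-\mu_k)^2\,d\mathbb{P} + \int_{\{|X_k-\mu_k|> \varepsilon s_n\}} (X_k-\mu_k)^2\,d\mathbb{P} \le \varepsilon^2 s_n^2 + \sum_{j=1}^n \int_{\{|X_j-\mu_j|>\varepsilon s_n\}} (X_j-\mu_j)^2\,d\mathbb{P}.$$

The right-hand side does not depend on $k$, so dividing by $s_n^2$ and taking the maximum over $k$ gives

$$\max_{k=1,\ldots,n} \frac{\sigma_k^2}{s_n^2} \le \varepsilon^2 + \frac{1}{s_n^2}\sum_{j=1}^n \int_{\{|X_j-\mu_j|>\varepsilon s_n\}} (X_j-\mu_j)^2\,d\mathbb{P}.$$

Under Lindeberg's condition the second term tends to $0$ as $n \to \infty$, so the limit superior of the left-hand side is at most $\varepsilon^2$. Since $\varepsilon > 0$ was arbitrary, the maximum tends to $0$.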


References

- P. Billingsley (1986). Probability and measure (2 ed.). p. 369.

- R. B. Ash (2000). Probability and measure theory (2 ed.). p. 307.

- S. I. Resnick (1999). A probability path. p. 314.